TDD is bullshit - early testing is not

Okay, blame me, dislike me, but I think TDD is just another Scrum (or communism): people follow it like a cult, and whenever there is an issue, the answer is "you are not doing it right". It is also similar to MLM (multi-level marketing) in that there is more talking than doing. However, testing is useful. I would say it is actually more useful than you think, especially testing your code early.

What's wrong with TDD?

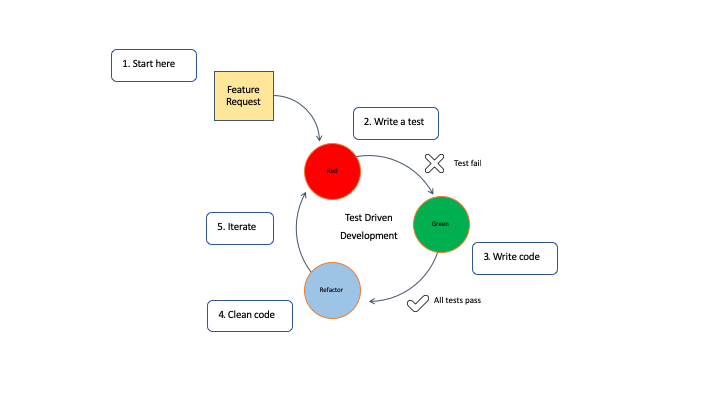

There are 5 steps in the TDD flow:

- Read, understand, and process the feature or bug request.

- Translate the requirement by writing a unit test. If you have hot reloading set up, the unit test will run and fail, since no code is implemented yet.

- Write and implement the code that fulfills the requirement. Run all tests; they should pass. If not, repeat this step.

- Clean up your code by refactoring.

- Lather, rinse, repeat.

Figure 1 shows these steps and their agile, cyclical, and iterative nature (I told you: they even say "agile").
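To make the "test first" part concrete, here is a minimal, framework-free C++ sketch of one red-green round. The requirement (a 10% discount on orders of 100.00 or more) and the names `PriceAfterDiscount` and `TestPriceAfterDiscount` are made up for illustration. In strict TDD the test function is written first and fails until the implementation exists. Here "fails" means it does not even compile.

```cpp
#include <cassert>

// Hypothetical requirement: orders of 100.00 or more get a 10% discount.
// Prices are integer cents to avoid floating-point rounding.

// Step 3: the implementation, written only after the test below existed.
long PriceAfterDiscount(long cents) {
    if (cents >= 10000) {
        return cents - cents / 10;  // 10% off; integer division rounds the discount down
    }
    return cents;
}

// Step 2: the requirement translated into a test. Without the function
// above it does not compile, which is the "red" state in this setup.
void TestPriceAfterDiscount() {
    assert(PriceAfterDiscount(5000) == 5000);    // below threshold: unchanged
    assert(PriceAfterDiscount(10000) == 9000);   // at threshold: 10% off
    assert(PriceAfterDiscount(12345) == 11111);  // 12345 - 1234
}
```

Step 4 (refactoring) would then reshape `PriceAfterDiscount` while `TestPriceAfterDiscount()` keeps passing.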

But what is wrong with this? Writing the test first is the first issue!

Why?

- You have no idea what you are doing yet, so your architecture will end up being poor and dictated by your unit test framework instead of reality.

- It teaches you to stop focusing on what matters and to blindly do things for "test coverage".

- It is guideline-based dumb programming: there are a bunch of things you do not need to test unless you are a noob, while other things deserve even performance or valgrind analysis.

Okay, you can say you do have at least some idea of what you want to do even before coding, because you did hours of whiteboard-masturbation on modules and interfaces. Fine, but the lower abstraction levels still deserve a better local architecture than one based on how you test! I am not saying you should not focus on writing testable code. I am saying it is wrong if tests are what "drives" your code, and that it is generally just as bad to cement things in too early.

While writing your solution, you will often find that your whiteboard ideas were wrong here and there, or not wrong but could be better. Guess what happens if you wrote your tests first? Refactoring toward the better architecture becomes more work, because you have cemented things into concrete with tests. Since the change now takes more time, you will feel less inclined to do it, so it becomes less likely to happen. So TDD looks like it would only work with full old-school waterfall: designing everything on paper up front (not just the high-level architecture), knowing everything in advance, no changes to the spec, and, by some miracle, getting the best architecture on the first try. Do not make it hard to change things!

I see this working worst when people also add "code coverage" and "clean code" to the equation. Clean code fragments those codebases into many files and many extremely short functions, and some people want to literally test all of them (remember? You cannot write code, only a test first, and then make it fail).

Also, most codebases did not have the TDD cult when they started, so I have honestly seen places where they hire interns just to raise the unit test coverage of the codebase. Imagine a guy whose only job is to add unit tests to bump a statistic on a website or in the IDE so management can feel safe. These guys never worked on features, just tests, for months. Thankfully I never had to do this, but I know two guys who did, and for both of them it was the most painful experience. Despite not knowing each other or working at the same place, both started cheating the system by writing the simplest possible tests that made things pass. I think this is pretty usual in codebases that had no unit testing before and now want to add it, and especially in big multinational enterprises that are not FAANG.

The other mistake is the opposite: they look at the legacy codebase with no unit tests whatsoever and say "we will never have any reasonable test coverage because we cannot hire interns just for this". I see this very often in small and mid-sized enterprises. Sometimes they try to test their greenfield projects by adding tests earlier, but they usually never reach high coverage there either, and that often makes them feel bad.

I have actually never seen anyone ship a project in the "by the definition" TDD style, where they write the test first, make it fail, and then write the code. I tried it out of curiosity on a project and it felt as wrong as I thought it would.

There is one more way TDD can fail. I saw projects where the dependencies and complications were huge. They tried to add some all-encompassing unit testing framework of choice, but that failed because of the complicated build, so there are still no tests whatsoever. Guess what: I rolled my own simple functions for testing the code and called them manually. When they finally add some framework, all they need to do is call these functions from a test. Currently these "tests" are not running at all, because I only called them manually from early module entry points. But let me tell you, it was already worth it, and let me explain why!
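Here is roughly what such hand-rolled tests can look like. This is a sketch under my own assumptions, not the code from that project. Each test is a plain function that returns its failure count, and a tiny `CHECK` macro reports the file and line instead of aborting. `ParseCsvLine` is a hypothetical piece of code under test. A framework added later only has to call `TestParseCsvLine()` and check that it returns 0.

```cpp
#include <cassert>
#include <cstdio>
#include <string>
#include <vector>

// Tiny hand-rolled check: prints the failing expression with file/line,
// increments the enclosing test's local `failures` counter, keeps running.
#define CHECK(expr)                                              \
    do {                                                         \
        if (!(expr)) {                                           \
            std::fprintf(stderr, "%s:%d: CHECK failed: %s\n",    \
                         __FILE__, __LINE__, #expr);             \
            ++failures;                                          \
        }                                                        \
    } while (0)

// Hypothetical code under test: split one line on commas (no quoting).
std::vector<std::string> ParseCsvLine(const std::string& line) {
    std::vector<std::string> fields;
    std::string current;
    for (char c : line) {
        if (c == ',') {
            fields.push_back(current);
            current.clear();
        } else {
            current += c;
        }
    }
    fields.push_back(current);  // last field (possibly empty)
    return fields;
}

// A "test" is just a function returning its failure count, so it can be
// called from an early module entry point today and from a framework later.
int TestParseCsvLine() {
    int failures = 0;
    CHECK(ParseCsvLine("a,b,c").size() == 3);
    CHECK(ParseCsvLine("a,,c")[1].empty());
    CHECK(ParseCsvLine("").size() == 1);  // one empty field
    CHECK(ParseCsvLine("x,y").back() == "y");
    return failures;
}
```

Until a framework exists, an entry point can do something like `if (TestParseCsvLine() != 0) { /* log loudly */ }` in debug builds.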

What to do instead?

Unit testing has a secret, tremendous value that no one talks about, though: fast feedback loops!

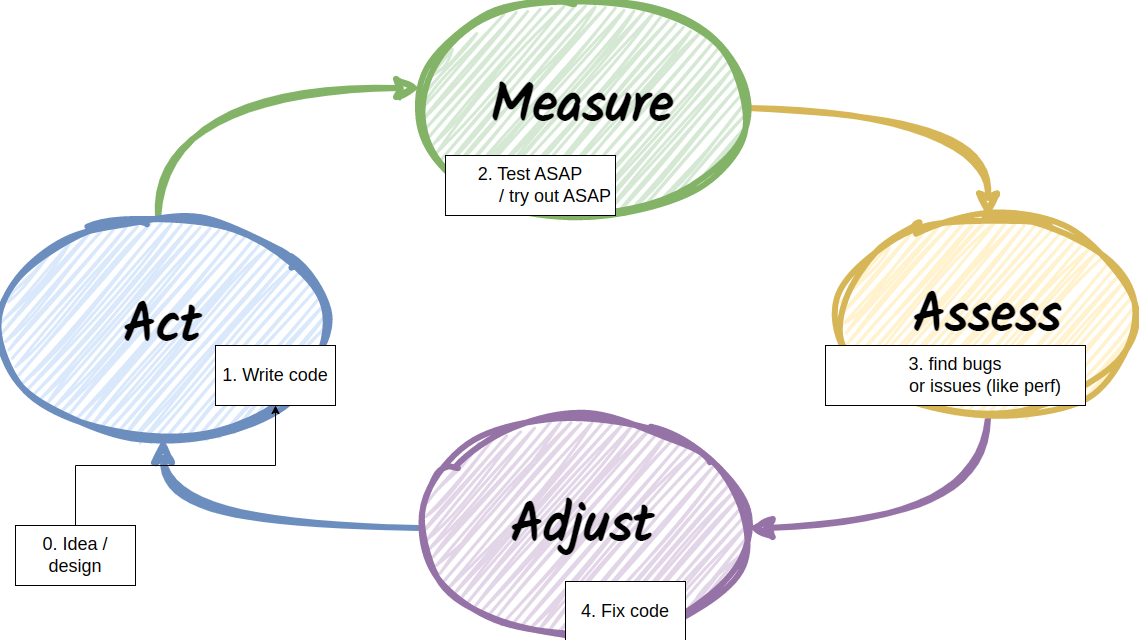

So instead of writing the tests first, let us recognize that testing (alongside prototyping) is a very good measurement tool for the empirical method, which is well known both inside and outside the software world!

Look, I don't know about you, but I enjoy seeing the results of what I am doing early, so that I can spot my mistakes early. I remember how my father programmed computers with punch cards during his time at university, and they had a week between syntax errors. I also remember that when I was young (early 2000s, in high school), I once coded a particle system in assembly for half a year and never saw any feedback on how it would work during that whole time. When I finally ran it, I was extremely happy that I did not just see a black screen. I saw wrongly behaving particles, so I had some idea of what to fix. I am no longer that young me who can write something not-so-buggy for half a year. We no longer live in my father's university days of punch cards, and people are finally ditching dynamic typing even on the web. So I think you can see why early feedback counts.

How is this related to testing? Let me tell you: whenever I write anything even moderately complicated, I write the algorithm first. Instead of hooking that code in or building further things on top of it, I literally write a unit test for it. Of course, only if the thing is hard enough to deserve it.

An example

Let's say I want to design a "collision engine" for a 3D CAD-like application. It is needed for a feature where objects "snap" to each other, like a wall snapping to the endpoint of another wall. It must be fast, so you come up with a complex algorithm / data structure to do this in linear time, cache the results in some grid, and so on.

Let's say there is no unit testing whatsoever and you start working on this. It is not an hour's worth of work if you want to do it right and better than the competition, and you will probably not get the engine right on the first try anyway. So you want to test this thing as soon as possible, much earlier than you could hook it all together with the real target functionality.

Unit tests let you run parts of this complex thing with simple data, which lets you find your bugs much faster. Also, you may have a separate unit test build or a little prototype project that only does this. With it, you can do all kinds of things you normally cannot do with your full application. For example, you can run valgrind on this test binary and fix any memory leaks.
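To show what "running parts of this complex thing with simple data" can look like, here is a deliberately small C++17 sketch. It is my own simplified 2D assumption, not the real engine. A uniform grid buckets wall endpoints by cell, so a snap query only scans the 3x3 cells around the cursor instead of every endpoint. `SnapGrid`, `Point`, and the cell size are hypothetical. The test feeds it a handful of hand-picked points, including one right across a cell border.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>
#include <optional>
#include <unordered_map>
#include <vector>

struct Point { double x, y; };

// Uniform grid over endpoints: each endpoint is stored in the cell that
// contains it, so a query with radius <= cell size only visits 3x3 cells.
class SnapGrid {
public:
    explicit SnapGrid(double cellSize) : cell_(cellSize) {}

    void Insert(Point p) { cells_[Key(CellOf(p.x), CellOf(p.y))].push_back(p); }

    // Nearest stored endpoint within `radius` of q, if any.
    // Assumes radius <= cell size; otherwise 3x3 cells are not enough.
    std::optional<Point> Snap(Point q, double radius) const {
        std::optional<Point> best;
        double bestD2 = radius * radius;
        const std::int64_t cx = CellOf(q.x), cy = CellOf(q.y);
        for (std::int64_t dx = -1; dx <= 1; ++dx) {
            for (std::int64_t dy = -1; dy <= 1; ++dy) {
                auto it = cells_.find(Key(cx + dx, cy + dy));
                if (it == cells_.end()) continue;
                for (const Point& p : it->second) {
                    const double d2 = (p.x - q.x) * (p.x - q.x) + (p.y - q.y) * (p.y - q.y);
                    if (d2 <= bestD2) { bestD2 = d2; best = p; }
                }
            }
        }
        return best;
    }

private:
    std::int64_t CellOf(double v) const {
        return static_cast<std::int64_t>(std::floor(v / cell_));
    }
    // Pack two cell coordinates (truncated to 32 bits) into one hash key.
    static std::uint64_t Key(std::int64_t cx, std::int64_t cy) {
        return (static_cast<std::uint64_t>(static_cast<std::uint32_t>(cx)) << 32) |
               static_cast<std::uint32_t>(cy);
    }

    double cell_;
    std::unordered_map<std::uint64_t, std::vector<Point>> cells_;
};

// Simple-data test: the matching endpoint sits in the neighbouring cell.
void TestSnapGrid() {
    SnapGrid grid(1.0);
    grid.Insert({0.95, 0.0});                // just left of the x = 1.0 border
    grid.Insert({5.0, 5.0});
    auto hit = grid.Snap({1.05, 0.0}, 0.2);  // query from the cell to the right
    assert(hit && hit->x == 0.95);
    assert(!grid.Snap({3.0, 3.0}, 0.2));     // nothing nearby
}
```

This builds into its own tiny test binary, so you can also run it under valgrind, e.g. `valgrind --leak-check=full ./snap_test`. Here `snap_test` stands for whatever you named that binary.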

Another example is a special map-like data structure. I literally have a separate test that tried out the "growth" of the data structure before the whole data structure was finished! So you can get creative and try out small parts of your code: the tricky, hard, or complex parts!

And one more thing: I can literally just call my unit test for the "growth" of the map-like data structure from a loop. That gives me not just a unit test but also a performance test for a smaller subset of the problem, one that can show where the cache misses are!
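Here is a sketch of both ideas, under my own assumptions, since the real data structure is not shown in this post. The growth policy of a hypothetical open-addressing map is pulled out into a free function, `NextCapacity`, so it can be tested before the map exists. The same test is then called in a loop and timed with `std::chrono` as a crude micro-benchmark. The power-of-two capacities and the 3/4 maximum load factor are assumptions.

```cpp
#include <cassert>
#include <chrono>
#include <cstddef>

// Hypothetical growth policy: power-of-two capacities; double the capacity
// while inserting one more element would exceed a 3/4 load factor.
std::size_t NextCapacity(std::size_t size, std::size_t capacity) {
    if (capacity == 0) capacity = 8;         // minimum table size
    while ((size + 1) * 4 > capacity * 3) {  // (size + 1) / capacity > 3/4
        capacity *= 2;
    }
    return capacity;
}

// Growth test: simulate inserting n elements and check the invariants the
// map will rely on, long before the map itself is finished.
void TestGrowth(std::size_t n) {
    std::size_t capacity = 0;
    for (std::size_t size = 0; size < n; ++size) {
        capacity = NextCapacity(size, capacity);
        assert((capacity & (capacity - 1)) == 0);  // stays a power of two
        assert((size + 1) * 4 <= capacity * 3);    // load factor <= 3/4
    }
}

// The same test called from a loop: returns the average nanoseconds per
// TestGrowth(n) call. Build without NDEBUG so the asserts (the work) stay in.
double BenchGrowth(std::size_t n, int iterations) {
    const auto start = std::chrono::steady_clock::now();
    for (int i = 0; i < iterations; ++i) {
        TestGrowth(n);
    }
    const auto elapsed = std::chrono::steady_clock::now() - start;
    return std::chrono::duration<double, std::nano>(elapsed).count() / iterations;
}
```

Once the loop also touches the real table memory, you can run such a loop-only binary on Linux under `perf stat -e cache-misses`. That gives a first look at the cache behaviour of just this part.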

What makes things easy to test and try out early?

I can tell you in one word: modularity!

If you have heard about TDD, you have probably heard about "mocking". In my opinion, mocking is the root of all evil, because it keeps you from realizing the really good architecture.

I would say that if you want to unit test and feel the need to mock something, that is probably the best indicator that your architecture is bad. Data processing should be separated from the data sources. Work on data only, please: do not mix your data access with your processing!

Code that sucks:

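```cpp
// Processing tangled with data access: to unit test ProcessLog you would
// have to mock the file writer, and for ProcessLogs the log manager too.
void WorkSet::ProcessLog(Log& log)
{
    FileWriterManagerFactoryBuilder::CreateFactory()
        .Write(log.toStream());
    log.processed = true;
}

void WorkSet::ProcessLogs()
{
    auto logz = MyLogManagerFactory::Create();
    for (auto& log : logz.getList())
    {
        WorkSet::ProcessLog(log);
    }
}
```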

More chad-style code:

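```cpp
// Pure processing: data in, data out. No files, no factories, nothing to mock.
LogStream WorkSet::ProcessLog(Log& log) {
    auto st = log.toStream();
    log.processed = true;
    return st;
}

// Still pure: works on whatever logs it is handed and returns the results.
std::vector<LogStream> WorkSet::ProcessLogs(LogAccessor& logz) {
    std::vector<LogStream> streams;
    for (auto& log : logz) {
        streams.push_back(WorkSet::ProcessLog(log));
    }
    return streams;
}

// Data access lives at the edge, separate from the processing.
LogAccessor WorkSet::GetLogs() {
    return MyLogManagerFactory::CreateAccessor();
}

// The only place where data access, processing and output meet.
void WorkSet::Do() {
    auto logz = WorkSet::GetLogs();
    for (const auto& st : WorkSet::ProcessLogs(logz)) {
        FileWriterManagerFactoryBuilder::CreateFactory().Write(st);
    }
}
```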

Why is this better? You can literally test your processing without even writing the data access. More importantly, it is easy to unit test and easy to prototype. You can also move your little processing into a separate header file with a simple entry point, whatever you need. It is just better in every way. And if you have code like the first version and are thinking about which mock framework to use, just do this refactor instead. You will thank me for it.

You should totally train yourself to write the latter style by default, because it is objectively better.
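To show that the second version really needs no mocks, here is a self-contained, runnable variant with simplified stand-in types of my own. `Log` holds a message, a `std::string` stands in for `LogStream`, and a plain `std::vector<Log>` stands in for `LogAccessor`. The "[log] ..." output format is also made up. The test builds its data in memory, with no file system, factory, or mock framework involved.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Simplified stand-ins for the types in the example above (assumptions).
struct Log {
    std::string message;
    bool processed = false;
};
using LogStream = std::string;

// Pure processing, DATA IN -> DATA OUT.
LogStream ProcessLog(Log& log) {
    LogStream st = "[log] " + log.message + "\n";
    log.processed = true;
    return st;
}

std::vector<LogStream> ProcessLogs(std::vector<Log>& logs) {
    std::vector<LogStream> out;
    out.reserve(logs.size());
    for (Log& log : logs) {
        out.push_back(ProcessLog(log));
    }
    return out;
}

// The test: plain in-memory data, no mocks.
void TestProcessLogs() {
    std::vector<Log> logs{{"disk full"}, {"retry ok"}};
    const auto streams = ProcessLogs(logs);
    assert(streams.size() == 2);
    assert(streams[0] == "[log] disk full\n");
    assert(logs[0].processed && logs[1].processed);
}
```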

One more thing: beware of too much "information hiding":

- In school you learn that making things private is good, but it is not!

- At least not always: for example, it makes testing harder unless you add test code to your own class or use reflection.

- Making things protected is better: in your test code, you can inherit from your class and access those members.

- But I would advise against OOP and inheritance too, so it is best if you can simply access whatever is relevant. For example, in your test build you put `#define private public` into your unit test "lib" and include it in your various files.

This makes your tests much more white-box. Do you have a complex data structure whose internals you know, and want to test that they really are what you expect? This gives you more than a debugger does, and it also helps you look more closely with debuggers / perf tools.
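Here is a minimal sketch of the "protected, then inherit in the test" option from the list above. A hypothetical fixed-capacity ring buffer keeps its indices `protected`. A test-only subclass exposes them, so the test can check the internal wrap-around directly, not just the public behaviour. `RingBuffer` and `RingBufferProbe` are made-up names.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical fixed-capacity ring buffer. Internals are protected,
// not private, so test code can inherit and inspect them.
class RingBuffer {
public:
    bool Push(int v) {
        if (count_ == kCap) return false;  // full
        data_[(head_ + count_) % kCap] = v;
        ++count_;
        return true;
    }
    bool Pop(int& out) {
        if (count_ == 0) return false;     // empty
        out = data_[head_];
        head_ = (head_ + 1) % kCap;
        --count_;
        return true;
    }

protected:
    static constexpr std::size_t kCap = 4;
    int data_[kCap] = {};
    std::size_t head_ = 0;   // index of the oldest element
    std::size_t count_ = 0;  // number of stored elements
};

// Test-only subclass: exposes the internals for white-box checks.
struct RingBufferProbe : RingBuffer {
    std::size_t Head() const { return head_; }
    std::size_t Count() const { return count_; }
    int Slot(std::size_t i) const { return data_[i]; }
};

void TestRingBufferWrapAround() {
    RingBufferProbe rb;
    for (int i = 1; i <= 4; ++i) rb.Push(i);    // fill: 1 2 3 4
    const bool pushedWhenFull = rb.Push(5);
    int a = 0, b = 0;
    rb.Pop(a);
    rb.Pop(b);
    rb.Push(5);                                 // wraps around into slot 0
    assert(!pushedWhenFull);
    assert(a == 1 && b == 2);
    assert(rb.Head() == 2 && rb.Count() == 3);  // internal state, white-box
    assert(rb.Slot(0) == 5);
}
```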

What about coverage?

I honestly think coverage does not really matter. It just leads to guideline-based programming instead of using your brain cells, and that is awful for your product and for your growth as a developer. If you use testing to try things out as early as possible, you will naturally test the really tricky parts anyway: you will test the right places.

But let's say you do not really have any "tricky" parts and you think all your code is very, very boring business stuff. First of all, sorry for you. Secondly, I find those products buggier than the "hard things". So you probably want to do something, and I think the best thing is to add a test for each bug that is found. At least this way, the bugs your users reported will not keep coming back at you.
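Here is a sketch of such a bug-driven regression test. The bug is invented for illustration: a version comparison compared raw strings, so "1.10" sorted before "1.9". The fix parses the numeric parts, and the test pins the reported case so it cannot silently come back. `ParseVersion`, `VersionLess`, and the bug itself are hypothetical.

```cpp
#include <cassert>
#include <sstream>
#include <string>
#include <vector>

// Split "1.10.2" into {1, 10, 2}. Assumes well-formed dot-separated numbers.
std::vector<int> ParseVersion(const std::string& v) {
    std::vector<int> parts;
    std::stringstream ss(v);
    std::string item;
    while (std::getline(ss, item, '.')) {
        parts.push_back(std::stoi(item));
    }
    return parts;
}

// Fixed comparison: numeric, part by part. The (hypothetical) buggy
// original was `return a < b;` on the raw strings.
bool VersionLess(const std::string& a, const std::string& b) {
    return ParseVersion(a) < ParseVersion(b);  // lexicographic over the ints
}

// Regression test named after the user report, so its purpose stays obvious.
void TestBug_1_10_SortedBefore_1_9() {
    assert(VersionLess("1.9", "1.10"));   // the reported case
    assert(!VersionLess("1.10", "1.9"));
    assert(VersionLess("1.9", "1.9.1"));  // a shorter prefix sorts first
}
```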

When does testing not help?

Sadly, there are times when testing is nearly impossible:

- Heavily multithreaded, async stuff

- Interop-heavy things

With the first, you are often better off "reading" the source code the way you read a book, and just being experienced. I once worked on heisenbugs that only appeared on specific Windows Server versions (depending on exactly which updates were installed), and they occurred only about 3 times per year. Good luck testing that in any way. By the way, the cause was that someone used an old 16-bit API from threads to read a shared resource from INI files, and things just sometimes locked up. It was legacy code last touched 8 years earlier, widely used in a software asset management solution all over the globe.

What about interop-heavy things? I currently work on an IFC exporter for a product that does not support that file format yet. On paper this sounds very testable. But we do not just want an IFC file that follows "the open spec", we want one that opens "properly" in certain versions of Revit. That software supports a seemingly random subset of the file format, and it silently stops processing parts of the file that contain things it does not handle properly yet. Good luck "testing" this in any way other than this: I export files, fire up the only Windows machine in my whole company instead of my cozy Arch Linux, and check whether things look right. Sadly, when they do not, the problem might not be reported immediately, and the report often tells you nothing about what is wrong. Also, sometimes I had to write non-standard things just because Revit liked them better. Again: good luck. But these are unwanted situations.

TDR: Test-driven refactoring

So TDD is not that great. You can probably do it in toy projects as a good exercise to learn what is testable code and what is not, but it does not give you real benefits. However, I know an area where writing tests first often does help: "TDR".

On really legacy projects, you may need a huge refactor of something with clearly defined interfaces. In that case, you can first write a bunch of tests that basically "record" how the original code behaves (testing as a tool for understanding / measurement, you see?) and then refactor the legacy code. This is actually a good trick in those cases, so test-driven refactoring is beneficial. Still, I will not tell you "always do this" or similar cultish words. I am just saying it often helps.
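Here is a sketch of the "record first, refactor second" idea, often called a characterization test. The legacy function `LegacyShippingCost` and its refactored replacement `ShippingCost` are invented for illustration. While both versions still exist, the legacy function itself acts as the recording (the oracle). The test sweeps the input range, including the tier boundaries, and requires the new code to reproduce every result. Once the legacy code is deleted, you would freeze those recorded outputs into a table instead.

```cpp
#include <cassert>
#include <initializer_list>

// Hypothetical legacy code: hard to read, but it is what users depend on.
// Tier boundaries are inclusive: <= 1000 g and <= 5000 g.
int LegacyShippingCost(int grams, bool express) {
    int cost;
    if (grams <= 1000) {
        cost = 5;
    } else {
        if (grams <= 5000) cost = 9;
        else cost = 9 + (grams - 5000 + 999) / 1000 * 2;
    }
    if (express) cost = cost * 2 + 1;
    return cost;
}

// Refactored version: must behave identically, boundaries included.
int ShippingCost(int grams, bool express) {
    int base = 5;
    if (grams > 5000) {
        const int extraKg = (grams - 5000 + 999) / 1000;  // started kilograms, rounded up
        base = 9 + 2 * extraKg;
    } else if (grams > 1000) {
        base = 9;
    }
    return express ? base * 2 + 1 : base;
}

// Characterization test: the legacy function is the oracle.
void TestShippingCostMatchesLegacy() {
    for (int grams = 0; grams <= 20000; grams += 7) {
        for (bool express : {false, true}) {
            assert(ShippingCost(grams, express) == LegacyShippingCost(grams, express));
        }
    }
    const int boundaries[] = {1000, 1001, 5000, 5001, 6000, 6001};
    for (int g : boundaries) {
        assert(ShippingCost(g, false) == LegacyShippingCost(g, false));
        assert(ShippingCost(g, true) == LegacyShippingCost(g, true));
    }
}
```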

TL;DR

So what are the key take-aways?

- Write your code first and think out a good architecture for your problem.

- TDD is useless, but in legacy codebases TDR (test-driven refactoring) is useful to know about!

- Try not to cement in (with tests) too much of your architecture before you write code for it!

- Basically NEVER-EVER do any mocking or use mock frameworks.

- But write modular code: do not dependency-inject services, just work DATA IN -> DATA OUT in your processing so it is testable.

- Test complex things or prototype complex things as early as possible - even before they are completed / hooked in.

- Do not care about coverage: early feedback helps you even if you are currently at 1% coverage. Just start testing right now!

- Do not necessarily wait for your build team to add some test framework: until then, just write your tests as regular functions and call them.

- Use your brain: do not work as if brain-dead guidelines were all you can follow.

In other words: testing is extremely useful. TDD sucks, but testing and trying things out as early as possible does not!

I also have YouTube now!

I also have a YouTube channel nowadays about high-performance code optimization, Linux, and other cool things. There are both English and Hungarian videos, so here are some examples:

En:

Hu:

Also don't forget the "see old/all posts" button to see old radeon kernel driver fixes and things :-)

Tags: opinion, TDD, testing, quality, clean-code, continuous-delivery